While profiling, nsys prints progress messages such as: Saving intermediate "report.qdstrm" file to disk.

If Nsight Systems does not capture any kernel launch, even though you have used `CUDA.@profile`, try starting nsys with `--trace cuda`.

CUDALAUNCH NVPROF CODE

Once Julia is running under nsys, you can execute whatever code you want in the REPL, including e.g. loading Revise so that you can modify your application as you go. When you call into code that is wrapped by `CUDA.@profile`, the profiler will become active and generate a profile output file in the current folder:

julia> using CUDA
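A session of this kind might look like the following minimal sketch. The names `workload` and `profile_workload`, the array size, and the `sin.(a)` computation are hypothetical and chosen only for illustration. The sketch assumes a working CUDA GPU, and how the macro behaves outside an external profiler depends on the CUDA.jl version.

```julia
using CUDA

# Hypothetical workload; any GPU code could take its place.
workload(a) = sin.(a)

function profile_workload(n = 1024)
    a = CUDA.rand(n, n)

    # Warm-up run outside the profiled region, so that compilation
    # is not captured in the profile.
    CUDA.@sync workload(a)

    # Only this region is marked as interesting for the profiler.
    CUDA.@profile CUDA.@sync workload(a)
    return nothing
end

if CUDA.functional()
    profile_workload()
else
    @info "No functional CUDA device found; skipping the profiling sketch"
end
```

Keeping the warm-up call outside the profiled region means the captured timeline reflects steady-state kernel execution rather than one-time compilation.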

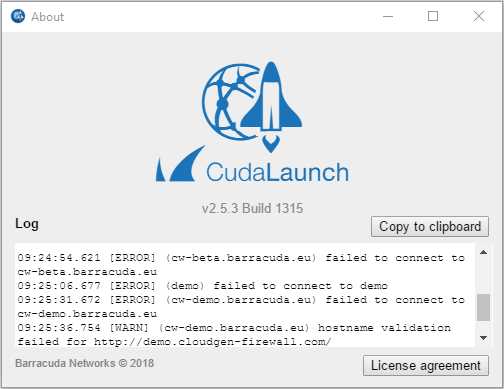

CUDALAUNCH NVPROF INSTALL

Note however that both nvprof and nvvp are deprecated, and will be removed from future versions of the CUDA toolkit.

NVIDIA Nsight Systems

Following the deprecation of nvprof and nvvp, NVIDIA published the Nsight Systems and Nsight Compute tools for, respectively, timeline profiling and more detailed kernel analysis. The former is well integrated with the Julia GPU packages, and makes it possible to profile iteratively without having to restart Julia, as was the case with nvvp and nvprof.

After downloading and installing Nsight Systems (a version might have been installed alongside the CUDA toolkit, but it is recommended to download and install the latest version from the NVIDIA website), you need to launch Julia from the command line, wrapped by the nsys utility from Nsight Systems:

$ nsys launch julia

The macro may print a warning such as:

┌ Warning: Calling CUDA.@profile only informs an external profiler to start.
│ The user is responsible for launching Julia under a CUDA profiler like `nvprof`.
└ @ CUDA.Profile ~/Julia/pkg/CUDA/src/profile.jl:42

nvprof and nvvp

If nvprof crashes, reporting that the application returned non-zero code 12, try starting nvprof with `--openacc-profiling off`.

For a visual overview of these results, you can use the NVIDIA Visual Profiler (nvvp):

CUDALAUNCH NVPROF SOFTWARE

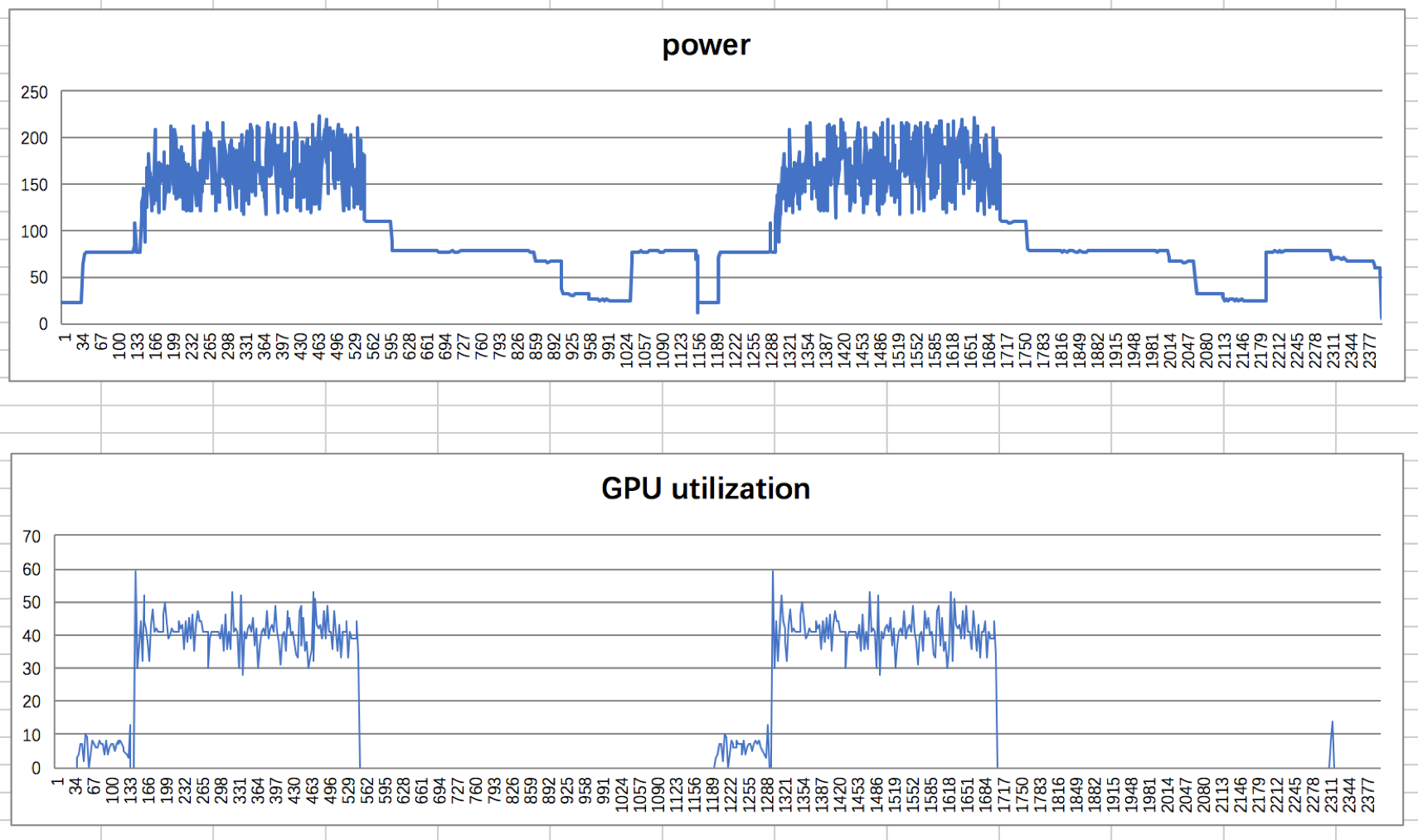

Profiling GPU code is harder than profiling Julia code executing on the CPU. For one, kernels typically execute asynchronously, and thus require appropriate synchronization when measuring their execution time. Furthermore, because the code executes on a different processor, it is much harder to know what is currently executing. CUDA, and the Julia CUDA packages, provide several tools and APIs to remedy this.

To accurately measure execution time in the presence of asynchronously executing kernels, CUDA.jl provides a `CUDA.@elapsed` macro that, much like `Base.@elapsed`, measures the total execution time of a block of code on the GPU:

julia> a = CUDA.rand(1024,1024,1024)

This is a low-level utility that measures time by submitting events to the GPU and measuring the time between them. As such, if the GPU was not idle in the first place, you may not get the expected result. The macro is mainly useful if your application needs to know how long certain GPU operations took to complete.

For more convenient time reporting, you can use the `CUDA.@time` macro, which mimics `Base.@time` by printing execution times as well as memory allocation stats, while making sure the GPU is idle before starting the measurement and waiting for all asynchronous operations to complete:

julia> a = CUDA.rand(1024,1024,1024)

0.046063 seconds (96 CPU allocations: 3.750 KiB) (1 GPU allocation: 4.000 GiB, 14.33% gc time of which 99.89% spent allocating)

The `CUDA.@time` macro is more user-friendly and is generally the more useful tool when measuring the end-to-end performance characteristics of a GPU application.

For robust measurements, however, it is advised to use the BenchmarkTools.jl package, which goes to great lengths to perform accurate measurements. Due to the asynchronous nature of GPUs, you need to ensure the GPU is synchronized at the end of every sample, e.g. by calling synchronize() or, even better, wrapping your code in `CUDA.@sync`:

julia> a = CUDA.rand(1024,1024,1024)

Note that the allocations as reported by BenchmarkTools are CPU allocations. For the GPU allocation behavior you need to consult `CUDA.@time`.

Application profiling

For profiling large applications, simple timings are insufficient. Instead, we want an overview of how and when the GPU was active, to avoid times where the device was idle and/or find which kernels need optimization.

As we cannot use the Julia profiler for this task, we will be using external profiling software that is part of the CUDA toolkit. To inform those external tools which code needs to be profiled (e.g., to exclude warm-up iterations or other uninteresting elements), you can surround the interesting code with the `CUDA.@profile` macro. Again, this macro mimics an equivalent from the standard library, but this time requires external software to actually perform the profiling:

julia> a = CUDA.rand(1024,1024,1024)
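As a rough illustration of the timing utilities described above, the following sketch compares an unsynchronized host-side timer with the event-based GPU timer and with a synchronized host-side timer. The helper name `time_sin`, the array size, and the `sin.(a)` workload are assumptions chosen for illustration. It needs a working CUDA GPU, and the measured numbers will differ from machine to machine.

```julia
using CUDA

# Hypothetical helper: times the same broadcast in three different ways.
function time_sin(n = 1024)
    a = CUDA.rand(n, n)

    # Warm-up call so that compilation time is excluded from the measurements.
    CUDA.@sync sin.(a)

    # Host-side timer without synchronization: the call may return before
    # the kernel has finished, so this can underestimate the real cost.
    t_host = Base.@elapsed sin.(a)

    # Event-based timer: measures the time between two events on the GPU.
    t_event = CUDA.@elapsed sin.(a)

    # Host-side timer that waits for the GPU to finish before stopping.
    t_sync = Base.@elapsed CUDA.@sync sin.(a)

    # Prints execution time together with CPU and GPU allocation statistics.
    CUDA.@time sin.(a)

    return (host = t_host, event = t_event, sync = t_sync)
end

if CUDA.functional()
    @show time_sin()
else
    @info "No functional CUDA device found; skipping the timing sketch"
end
```

With BenchmarkTools.jl the same synchronization pattern applies: the benchmarked expression would be wrapped as `@benchmark CUDA.@sync sin.($a)`, so that every sample includes the time the GPU needs to finish.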
